I am often contacted by prospective and actual users of Factorsynth who are curious about how it works and how it fits into the current (October 2020) landscape of machine learning (ML) technologies. Does it need lots of training data? Can it separate full instruments? Is it a neural network? Is it AI? With this article, I hope to shed some light on those matters.

I released Factorsynth as a commercial Max For Live device a bit more than two years ago. It can be roughly described as a software tool that breaks sounds into pieces, and lets you modify and recombine those pieces to modify the original sound or create new ones. These pieces have a certain degree of structure (they can be notes, impulses, drum hits, transients, motifs, repeated patterns…) and, in contrast with samplers or tools based on granular synthesis, they are not just slices of the input, but layers that can overlap, both in time and in frequency, in the original sound.

Enter the matrix

In the documentation accompanying Factorsynth and on my webpage, I often write that Factorsynth extracts those elements using something called matrix factorization. In all fairness, just saying “matrix factorization” doesn’t mean a lot. There are a lot of algorithms, both in maths and in ML, that can be described as performing some kind of matrix factorization or decomposition. Such diverse tasks as solving systems of equations, compressing data, visualizing data, recommending Netflix movies and categorizing Scotch whiskies share one thing in common: they can be framed as matrix factorization problems. So, I rather use “matrix factorization” as a vague shorthand for the (peculiar and slightly boring) name of the actual algorithm Factorsynth is based on: Non-negative Matrix Factorization (NMF).

I won’t get into the details of how NMF works, or why the funny name (that alone deserves another article), but suffice it to say, for now, that it is an unsupervised learning algorithm that identifies patterns in data. Let’s delve a bit deeper into what that means.

I’ll start with the learning part. In the context of ML, learning means that the computer solves a task by observing a set of data, instead of by following a fixed set of instructions written by a programmer. Imagine we wish to extract a kick drum hit from a mix. A non-ML way to do it would be to manually set the typical frequency range and profile of a kick drum, and its typical decay rate, detect some onsets in the mix, and filter out the hits that correspond to those features. The ML approach is to present the computer with a bunch of isolated kick drum examples plus a set of mixes, and let it figure out by itself how to separate them. You can guess that the ML version is much more versatile. The set of examples presented to the computer is what we call a training database. Internally, the system creates a model from this data, which contains the automatically extracted rules.

Now there are two different classes of methods in ML: supervised and unsupervised learning. The former means that the database presented to the computer has to be manually organized in some way or another prior to learning. The example I just mentioned is supervised: we need to separate the database into mixes and isolated drum hits. Another example: if we wish to train a ML image classifier, we need to present to the computer, separately, exemplars of the different classes we wish to classify (e.g. houses, cars, trees etc.).

In unsupervised learning, there is no such preliminary database organization: we just present a bunch of unorganized, unlabelled data. There are two important subtypes of unsupervised learning approaches. In clustering, we expect the system to group the database elements into distinct, but internally-similar classes. In representation learning, also called feature learning, the algorithm aims at extracting unknown patterns from the database. As you might have guessed, Factorsynth falls into this second category.

Among the factorization methods aimed at feature learning, NMF has two distinct properties that make it especially powerful. First, the patterns it extracts are actually layers that can be added together to obtain a close approximation of the original data. And second, each extracted pattern is, by itself, an object with a plausible physical interpretation. In other words, NMF models data as a superposition of interpretable objects. This is what allows, in Factorsynth, to listen to the separate components as constituent sonic elements (a drum hit, a noise, a resonance, a collection of D-notes, a chord pad…).

This is also what made NMF the algorithm at the core of most successful sound unmixing systems until around 2015. Sound unmixing, also called source separation, when applied to music, attempts to separate the instruments or stems that make up a mix, such as removing the vocals, the drums, etc. It is an extraordinarily demanding task to design a system capable of automatically removing full instruments at production-level quality. NMF, by itself, is not capable of doing that. It is, as I said, at the core of many source separation systems, but it needs to be heavily extended with more complex versions of the algorithm that take into account further knowledge about the sources to be separated, or that rely on some form of supervised pre-training with large databases of isolated instruments.

The reason is that NMF, in its original unsupervised version, does not take any musical or acoustic assumptions, and therefore the elements it generates, while interpretable and often interesting, are unpredictable. The original idea that led me to the development of Factorsynth was to explore if the application of such unsupervised and unpredictable plain version of NMF was nonetheless compelling for sound design and music creation.

Thus, Factorsynth extracts elementary sounds from mixes, but we cannot know in advance what to expect, and in which order the elements will be extracted. Sometimes, we will find a separated hi-hat in the upper elements, sometimes a single chord played by a guitar will end up in its own component, and sometimes a single piano note will be split into three different components. In contrast, for a proper unmixing, the process must be predictable and targeted: we want the drums as the first component, the voice as the second, etc.

The fact that I framed Factorsynth away from a traditional source separation context allowed me also to twist the decomposition capabilities of NMF into doing some unorthodox things such as creating hybrids (cross-synthesizing) of components from two different sounds, or even cross-synthesizing elements from within the same sound. This kind of unusual processings, unrelated to a pure unmixing setting, is precisely what sets Factorsynth apart from other NMF-based systems.

As a completely unsupervised system, Factorsynth does not expect any particular set of instruments in the mix. There is no stored pre-trained model, nor any need to pre-train with a large set of isolated samples from different instruments. The input doesn’t even need to be a mix: Factorsynth will happily decompose a single instrument, or even single notes, down into its parts (attacks, transient noises, sustained resonances…). Its training database is the collection of tens or hundreds of consecutive spectral frames extracted from the input sound. Learning (factorization) takes only a few seconds if the input clip is less than one minute long.

The deep and the un-unmixable

I mentioned that NMF was the go-to choice for building source separation systems up until 2015. As far as ML technology is concerned, that is ancient history. So what happened afterwards? Since then, virtually all application areas of ML, source separation included, have been revolutionized by the arrival of deep neural networks (a.k.a. deep learning).

An artificial neural network is an ML model that, despite its fancy name, bares only a superficial resemblance to the workings of a human brain. Its foundation, the artificial neuron, is a set of mathematical operations only slightly more complicated than matrix multiplication. But the power of neural networks, and their impressive ability to solve ever increasingly demanding ML problems, arises when many neurons are combined into elaborate hierarchies. A single neural network can contain hundreds to thousands of neurons, which are usually organized into layers. Although there is no strict definition, a neural network is often said to be deep if it has more than three internal layers of neurons.

A single network can therefore entail thousands of matrix multiplications (among other operations), and training is then conceptually similar to having to perform thousands of matrix factorizations at once. It should come as no surprise that learning such complex systems requires much bigger training databases and much more computing resources. But if both data and resources are available, a carefully-designed neural network can obtain striking performances. In source separation, NMF-based systems have been recently as good as wiped out by deep learning systems. Quality is getting so good that source separation is starting to raise intellectual property questions related to the unmixed stems, and researchers have started to ponder how to make mixes impossible to unmix.

Prominent, freely-accessible neural unmixing systems include Spleeter and Demucs. It is important to note that those systems provide pre-trained models that are ready to use to quickly separate between a pre-defined set of stems (such as drums/voice/bass/others). In the ML jargon I just introduced, they are supervised. If you would like to separate a different instrument, you need to gather the database and train the network yourself, which requires access to a computer with one or several GPUs, or to a cloud computing service, and then patiently wait during several days of computation.

This shows how the workflows and goals in source separation systems and Factorsynth are rather contrary. In the first, focus is on quality to separate a given set of instruments, and no expense is spared in terms of model complexity, database acquisition and preparation, and computing resources in order to achieve it. Separation is quickly computed on the local computer, but learning is performed beforehand on powerful remote systems. In Factorsynth, on the other hand, focus is on flexibility, and both learning and separation happen, fast, on the local computer.

But there is a second, perhaps even more crucial reason why Factorsynth is not implemented as a neural network. I have already mentioned it: the interpretability of the components. The inner workings of deep neural networks are hard to interpret. In other words, it is hard to predict how manipulating the learned model will affect the output. This is hardly a setback in quality-focused source separation, where the model automatically does its job, once trained, without any user intervention. Again, this is opposite to the raison d’être of Factorsynth, which is precisely user-guided sound manipulation. In NMF, the components are directly interpretable as notes, motifs, and many other types of sonic objects, that is, objects that can actually be listened to.

To sum up: deep neural networks are undoubtedly the way to go for quality-focused, instrument-driven source separation, whereas factorization-based approaches are still relevant in applications requiring model interpretability and adaptability to very different kinds of mixes, or when little training data is available.

But is it AI?

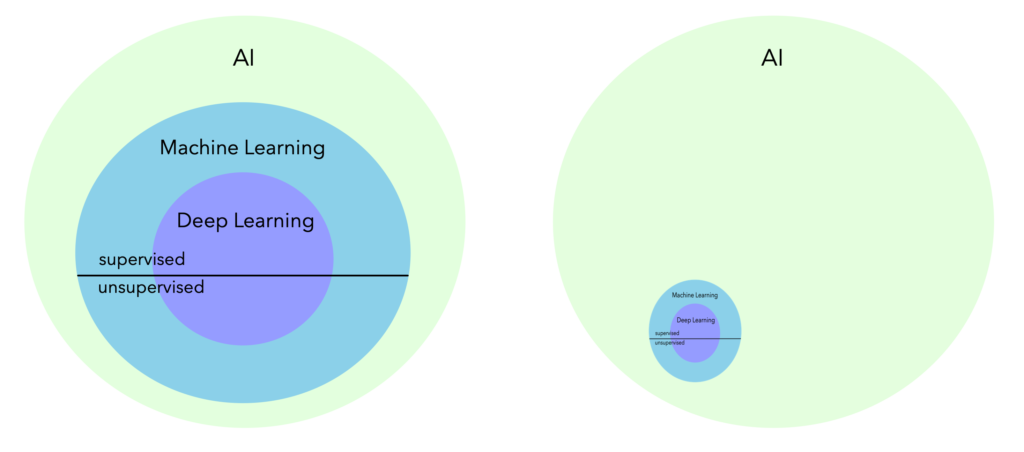

So, we have seen that Factorsynth is based on NMF, which belongs to a class of ML algorithms called unsupervised representation learning. But is it AI? What is the difference between Machine Learning and Artificial Intelligence anyway?

Often wrongly treated as synonyms, ML is in fact a subfield of AI, just as learning is only one of the many aspects of human intelligence. ML studies algorithms capable of extracting rules from data, but that is only just a tiny step in the enormous endeavour of simulating intelligence with computers; we don’t even agree on what intelligence actually means in the first place. In spite of the abuse of the catchy AI term pervading press and company pitches alike, we are decades away from computers with a human level of intelligence, and even the feasibility of such a feat remains highly speculative.

So in theory yes, all ML is AI, insofar as it is a subdomain of it. But systematically putting the AI label to every ML algorithm, including neural networks, is a bit like replacing your floor tiles and saying you’re doing architecture: technically true, but somewhat overdone. It incurs in the risk of bringing about a general loss of credibility to the field, which is exactly what caused the two previous bursts of the AI bubble (in the early 70s and early 90s). That’s why I prefer using the term ML for now, and also why I would make a lousy director of marketing.

The scary algorithm

One final word about the role Factorsynth plays in the composer’s or sound designer’s workflow. The device puts ML to use in the analysis part (factorization), not in the synthesis part. The sound manipulation and resynthesis part is fully user-driven. There are some randomized operations available, but they are not controlled by any ML process, they act like some trial-and-error shortcuts. The system creates a palette of sound material at the disposal of the composer, and then steps back and does not interfere any further in the creative process.

ML, let alone AI, are still in their infancy. Human-grade machine creativity lies so far into the future (if even possible), that the sometimes sinister public perception of those technologies is largely unjustified. ML is no more and no less than a tool that assists composers and performers and yes, it can also influence the musical discourse. Just like any other music technology has, present or past.

Let’s wrap up with the key points I hope this article helped clarify:

- Factorsynth is based on Non-negative Matrix Factorization (NMF), an unsupervised machine learning algorithm that decomposes data as superpositions of physically interpretable objects.

- Factorsynth is not a source separation system. It is not intended to separate full instruments, but to extract unpredictable but interesting sound elements with a certain degree of structure (notes, drum hits, rhythmical or melodic motifs).

- It does not rely on pre-training with large databases, nor does it contain any instrument model. It is completely “agnostic” to the type of mix it observes.

- Deep learning (neural networks) are the unquestionable state of the art in source separation, but they lack the interpretability and ability to work with little data required by the sound design workflow Factorsynth addresses.